Autonomous Land Vehicle In a Neural Network

This project is no longer active.

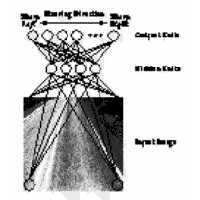

ALVINN is a perception system that learns to control the NAVLAB vehicles by watching a person drive. ALVINN’s architecture is a single-hidden-layer backpropagation network. The input layer is a 30×32-unit two-dimensional “retina” that receives input from the vehicle’s video camera. Each input unit is fully connected to a layer of five hidden units, which are in turn fully connected to a layer of 30 output units. The output layer is a linear representation of the direction the vehicle should travel to stay on the road.
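The layer sizes described above can be illustrated with a minimal forward-pass sketch in plain Python. This is not the original ALVINN code: the sigmoid activation, the random weight initialization, the bias terms, and the function names (`init_layer`, `dense`, `forward`, `steering_from_outputs`) are all assumptions made for illustration. In particular, reading the steering direction from the single most active output unit is a simplification chosen here, not a description of how ALVINN decoded its output.

```python
import math
import random

# Layer sizes taken from the description: a 30x32 retina, 5 hidden units, 30 outputs.
RETINA_ROWS, RETINA_COLS = 30, 32
N_INPUT = RETINA_ROWS * RETINA_COLS  # 960 input units
N_HIDDEN = 5
N_OUTPUT = 30


def sigmoid(x):
    # Assumed activation function; the description does not name one.
    return 1.0 / (1.0 + math.exp(-x))


def init_layer(n_in, n_out, rng):
    """Return (weights, biases) for a fully connected layer.

    Small uniform random weights are an assumed initialization scheme.
    """
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense(inputs, weights, biases):
    """Fully connected layer: every input feeds every unit in the layer."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(image, params):
    """Run a 30x32 image (rows of intensities in [0, 1]) through the network."""
    flat = [pixel for row in image for pixel in row]
    if len(flat) != N_INPUT:
        raise ValueError(f"expected {N_INPUT} pixels, got {len(flat)}")
    (w_hidden, b_hidden), (w_out, b_out) = params
    hidden = dense(flat, w_hidden, b_hidden)
    return dense(hidden, w_out, b_out)


def steering_from_outputs(outputs):
    """Map the most active output unit to a value in [-1, 1].

    Assumed convention: index 0 is the sharpest left turn (-1.0) and the
    last index is the sharpest right turn (+1.0).
    """
    best = max(range(len(outputs)), key=outputs.__getitem__)
    return 2.0 * best / (len(outputs) - 1) - 1.0


rng = random.Random(0)
params = (init_layer(N_INPUT, N_HIDDEN, rng), init_layer(N_HIDDEN, N_OUTPUT, rng))
image = [[rng.random() for _ in range(RETINA_COLS)] for _ in range(RETINA_ROWS)]
outputs = forward(image, params)
steer = steering_from_outputs(outputs)
```

With these sizes the network has 960 × 5 + 5 × 30 = 4,950 connection weights, not counting the bias terms assumed in the sketch. The weights here are random, so the steering value is meaningless until the network is trained on recorded driving.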
