Gaze Estimation

Associated Lab: Face Group

This Project is no longer active.

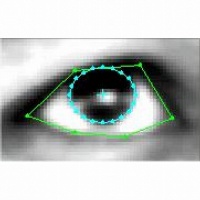

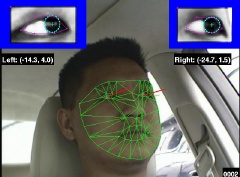

Passive gaze estimation is usually performed by locating the pupils and the inner and outer eye corners in the image of the driver’s head. Of these feature points, the eye corners are just as important as the pupils, and perhaps harder to detect. The eye corners are usually found using local feature detectors and trackers. In contrast, we have built a passive gaze tracking system that uses a global head model, specifically an Active Appearance Model (AAM), to track the whole head. From the AAM, the eye corners, eye region, and head pose are robustly extracted and then used to estimate the gaze. See here for more details of our AAM tracking algorithms. See the movies below for examples of our results.
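To make the last step concrete, here is a minimal sketch of how eye corners, a pupil location, and a head pose might be combined into a gaze direction. It is not the project's actual algorithm. It assumes a simple spherical eyeball whose center projects to the midpoint of the two eye corners and whose radius is a fixed fraction of the corner-to-corner distance, and it simply adds the eye-in-head angles to the head angles, ignoring foreshortening from head rotation. The function name `estimate_gaze` and the parameter `radius_ratio` are hypothetical.

```python
import math


def estimate_gaze(inner_corner, outer_corner, pupil,
                  head_yaw_deg, head_pitch_deg, radius_ratio=0.5):
    """Return an approximate (yaw, pitch) gaze direction in degrees.

    inner_corner, outer_corner, pupil: (x, y) image points for one eye.
    head_yaw_deg, head_pitch_deg: head pose from the head tracker.
    radius_ratio: assumed eyeball radius as a fraction of the eye width
                  (an illustrative value, not a measured one).
    """
    # Assumption: the eyeball center projects to the midpoint of the corners.
    center_x = (inner_corner[0] + outer_corner[0]) / 2.0
    center_y = (inner_corner[1] + outer_corner[1]) / 2.0

    eye_width = math.hypot(outer_corner[0] - inner_corner[0],
                           outer_corner[1] - inner_corner[1])
    if eye_width == 0:
        raise ValueError("eye corners coincide; cannot estimate gaze")
    radius = radius_ratio * eye_width

    # Pupil offset from the eyeball center, normalized by the radius and
    # clamped so that asin() stays within its domain.
    norm_x = max(-1.0, min(1.0, (pupil[0] - center_x) / radius))
    norm_y = max(-1.0, min(1.0, (pupil[1] - center_y) / radius))

    # Eye-in-head rotation; image y grows downward, so negate for pitch.
    eye_yaw_deg = math.degrees(math.asin(norm_x))
    eye_pitch_deg = math.degrees(math.asin(-norm_y))

    # Small-angle simplification: add eye rotation to head rotation.
    return head_yaw_deg + eye_yaw_deg, head_pitch_deg + eye_pitch_deg


if __name__ == "__main__":
    # Hypothetical pixel coordinates and head pose, for illustration only.
    yaw, pitch = estimate_gaze((100.0, 200.0), (140.0, 200.0), (125.0, 198.0),
                               head_yaw_deg=10.0, head_pitch_deg=-5.0)
    print(f"gaze yaw = {yaw:.1f} deg, pitch = {pitch:.1f} deg")
```

The sketch shows why the eye corners matter as much as the pupils: the corners fix both the eyeball center and the scale, so an error in either corner shifts the estimated angle directly.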

Movie of driver's head from interior camera

Corresponding movie of external scene

past head

- Simon Baker

past staff

- Takahiro Ishikawa

past contact

- Simon Baker