PhD Speaking Qualifier

May

Meeting ID: 942 4671 0665

Passcode: jkhzoom

Abstract:

Photorealistic rendering of dynamic humans is an important capability for telepresence systems. Recently, neural rendering methods have been developed to create high-fidelity models of humans and objects. However, some of these methods do not produce results with high enough fidelity for drivable human models (e.g., Neural Volumes), while others have extremely long rendering times (e.g., NeRF). In this work, we propose a novel compositional 3D representation that combines the strengths of these earlier methods to produce results that are both higher in resolution and faster to render. Our representation bridges the gap between discrete and continuous volumetric representations by combining a coarse, 3D-structure-aware grid of animation codes with a continuous learned scene function that maps every position and its corresponding local animation code to a view-dependent emitted radiance and a local volume density. Differentiable volume rendering is used both to compute photorealistic novel views of the human head and upper body and to train the representation end-to-end using only 2D supervision. In addition, we show that the learned dynamic radiance field can be used to synthesize novel, unseen expressions from a global animation code. Extensive experiments demonstrate that our method achieves higher-fidelity photorealistic rendering and a greater capacity to fit videos than previous work.
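The two mechanisms the abstract names, looking up a local animation code from a coarse grid and compositing radiance and density along a camera ray, can be illustrated with a minimal NumPy sketch. It is not the speaker's implementation: the trilinear interpolation, the (D, H, W, C) grid layout, the small random MLP standing in for the learned scene function, and the names `trilinear_lookup`, `scene_function`, `composite`, and `render_ray` are all assumptions made for illustration. The compositing step follows the standard emission-absorption quadrature used by NeRF-style renderers.

```python
import itertools
import numpy as np

def trilinear_lookup(grid, pts):
    """Interpolate a coarse code grid at continuous positions (hypothetical helper).

    grid: (D, H, W, C) array of animation codes (assumed layout).
    pts:  (N, 3) positions in the grid's normalized [0, 1]^3 frame.
    Returns an (N, C) array of local animation codes.
    """
    dims = np.array(grid.shape[:3]) - 1                      # max index per axis
    p = np.clip(pts, 0.0, 1.0) * dims                        # continuous index coordinates
    i0 = np.clip(np.floor(p).astype(int), 0, np.maximum(dims - 1, 0))
    i1 = np.minimum(i0 + 1, dims)
    f = p - i0                                               # fractional offsets in [0, 1]
    out = np.zeros((pts.shape[0], grid.shape[3]))
    for corner in itertools.product((0, 1), repeat=3):       # the 8 cell corners
        idx = tuple(i1[:, a] if c else i0[:, a] for a, c in enumerate(corner))
        w = np.prod([f[:, a] if c else 1.0 - f[:, a] for a, c in enumerate(corner)], axis=0)
        out += w[:, None] * grid[idx]
    return out

def init_params(rng, code_dim, hidden=32):
    """Random weights for a toy stand-in of the learned scene function (hypothetical)."""
    return {
        "W1": rng.normal(scale=0.1, size=(3 + code_dim, hidden)), "b1": np.zeros(hidden),
        "w_sigma": rng.normal(scale=0.1, size=hidden), "b_sigma": 0.0,
        "W_rgb": rng.normal(scale=0.1, size=(hidden + 3, 3)), "b_rgb": np.zeros(3),
    }

def scene_function(x, code, view_dir, params):
    """Map (position, local code) to density, and add view direction for radiance."""
    h = np.maximum(np.concatenate([x, code], axis=-1) @ params["W1"] + params["b1"], 0.0)
    sigma = np.logaddexp(0.0, h @ params["w_sigma"] + params["b_sigma"])  # softplus, >= 0
    logits = np.concatenate([h, view_dir], axis=-1) @ params["W_rgb"] + params["b_rgb"]
    rgb = 1.0 / (1.0 + np.exp(-logits))                                  # sigmoid, in (0, 1)
    return rgb, sigma

def composite(rgb, sigma, t):
    """Emission-absorption quadrature: C = sum_i T_i * alpha_i * c_i."""
    delta = np.diff(t)
    delta = np.append(delta, delta[-1])                  # reuse last spacing for final sample
    alpha = 1.0 - np.exp(-sigma * delta)                 # per-sample opacity
    trans = np.concatenate([[1.0], np.cumprod(1.0 - alpha)[:-1]])  # transmittance T_i
    weights = trans * alpha
    return (weights[:, None] * rgb).sum(axis=0), weights

def render_ray(grid, params, origin, direction, near, far, n_samples=64):
    """Sample a ray, look up local codes, query the scene function, and composite."""
    t = np.linspace(near, far, n_samples)
    pts = origin[None, :] + t[:, None] * direction[None, :]
    codes = trilinear_lookup(grid, pts)
    view = np.broadcast_to(direction / np.linalg.norm(direction), pts.shape)
    rgb, sigma = scene_function(pts, codes, view, params)
    return composite(rgb, sigma, t)

# Usage with a random 4x4x4 grid of 8-dim codes (illustrative values only).
rng = np.random.default_rng(0)
grid = rng.normal(size=(4, 4, 4, 8))
params = init_params(rng, code_dim=8)
color, weights = render_ray(grid, params, np.array([0.5, 0.5, 0.0]),
                            np.array([0.0, 0.0, 1.0]), near=0.0, far=1.0)
print(color)
```

Every operation above is differentiable, so the same computation written in an autodiff framework could in principle be optimized end-to-end against 2D images, which is the property the abstract relies on; this sketch shows only the forward pass.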

Research Qualifier Committee:

Jessica Hodgins (advisor)

Jun-Yan Zhu

Matthew O’Toole

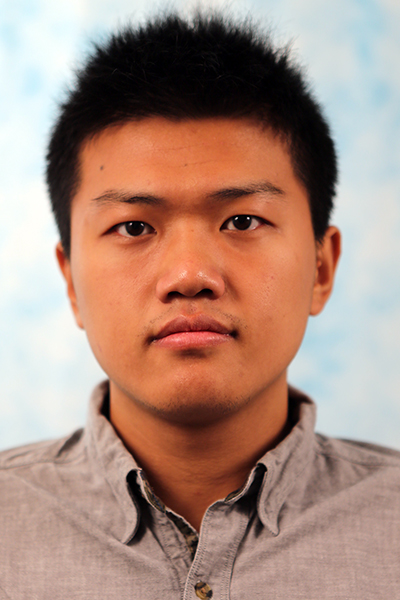

Chaoyang Wang