Abstract:

With the growing accessibility of humanoid hardware and rapid advances in foundation models, we are entering an era in which general embodied intelligence is within reach, enabling humanoid robots to perform a wide range of tasks in human-centric environments. Despite significant progress in language and vision foundation models, controlling high-degree-of-freedom humanoids to perform agile, dexterous, and versatile tasks remains a challenge.

In this talk, I will present my pathway toward developing a foundation control model that scales along two critical dimensions: task generalizability and agile, dexterous control. To address task generalization, I will introduce a progression of representation learning, from WoCoCo, which conditions on contact and task sequences, to HOVER, a general neural interface designed to scale control policies across tasks and command interfaces. For agility and dexterity, I will present methods ranging from sim-to-real adaptation to real-to-sim-to-real transfer. In particular, I will highlight ASAP, our novel approach that enables high-agility control for single tasks by leveraging a residual policy. Finally, I will demonstrate how the coherent integration of these two directions leads to general and agile control across embodiments, as exemplified by AnyCar, a cross-embodiment control system capable of performing diverse and dexterous wheeled maneuvers.

Committee:

Prof. Guanya Shi (advisor)

Prof. John Dolan (advisor)

Prof. Changliu Liu

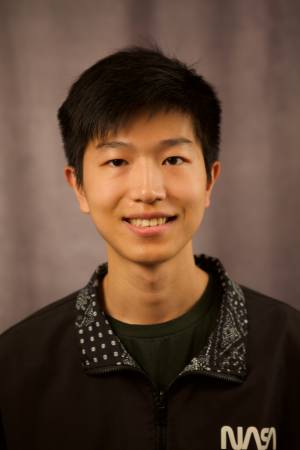

Xiaofeng Guo