A recently published paper, “Autonomous Aerial Cinematography In Unstructured Environments With Artistic Decision-Making”, outlines a flying robotic system for filmmaking with creativity firmly in the driver’s seat.

From the paper:

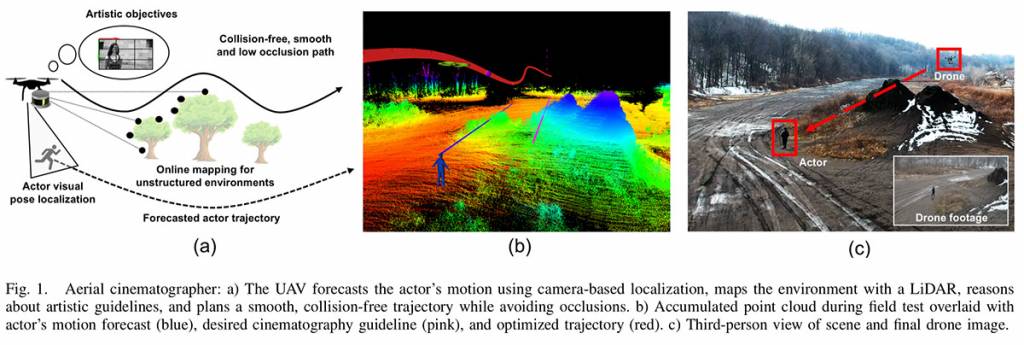

Aerial cinematography is revolutionizing industries that require live and dynamic camera viewpoints such as entertainment, sports, and security. However, safely piloting a drone while filming a moving target in the presence of obstacles is immensely taxing, often requiring multiple expert human operators. Hence, there is demand for an autonomous cinematographer that can reason about both geometry and scene context in real-time. Existing approaches do not address all aspects of this problem; they either require high-precision motion-capture systems or GPS tags to localize targets, rely on prior maps of the environment, plan for small time horizons, or only follow artistic guidelines specified before flight.

In this work, we address the problem in its entirety and propose a complete system for real-time aerial cinematography that for the first time combines: (1) vision-based target estimation; (2) 3D signed-distance mapping for occlusion estimation; (3) efficient trajectory optimization for long time-horizon camera motion; and (4) learning-based artistic shot selection. We extensively evaluate our system both in simulation and in field experiments by filming dynamic targets moving through unstructured environments. Our results indicate that our system can operate reliably in the real world without restrictive assumptions. We also provide in-depth analysis and discussions for each module, with the hope that our design tradeoffs can generalize to other related applications.

[embedyt] https://www.youtube.com/watch?v=ookhHnqmlaU[/embedyt]

Paper to be published at IROS 2019 (https://arxiv.org/abs/1904.02319)

Paper to be published at IROS 2019 (https://arxiv.org/abs/1904.02579)

Paper published at ICRA 2019 (https://arxiv.org/abs/1903.11174)

Paper published at ISER 2018 (https://arxiv.org/abs/1808.09563)